AI vision system helps blind people navigate with multi-sensory feedback

Researchers in China have developed a wearable system that helps blind and partially sighted people navigate their environment. The system integrates artificial intelligence algorithms with new hardware, including artificial skin sensors, to provide real-time navigation assistance. Testing with visually impaired participants demonstrated significant improvements in obstacle avoidance and task completion in both virtual and real-world settings.

Addressing a long-standing challenge

Scientists have long sought effective solutions to assist visually impaired people (VIPs) with daily navigation tasks. While previous wearable electronic visual assistance systems have shown promise, their widespread adoption has been limited due to usability challenges.

“Despite evolving capabilities, these technologies have not seen widespread adoption within the VIP community,” note the researchers in their paper published in Nature Machine Intelligence. “The limited uptake can be largely attributed to usability challenges, including the cognitive and physical load in use, as well as the intricate training process required before usage.”

The new system, developed by researchers led by Leilei Gu from Shanghai Jiao Tong University, takes a fundamentally different approach by focusing on human-centred design that prioritises both functionality and usability.

Integrating multiple sensory modalities

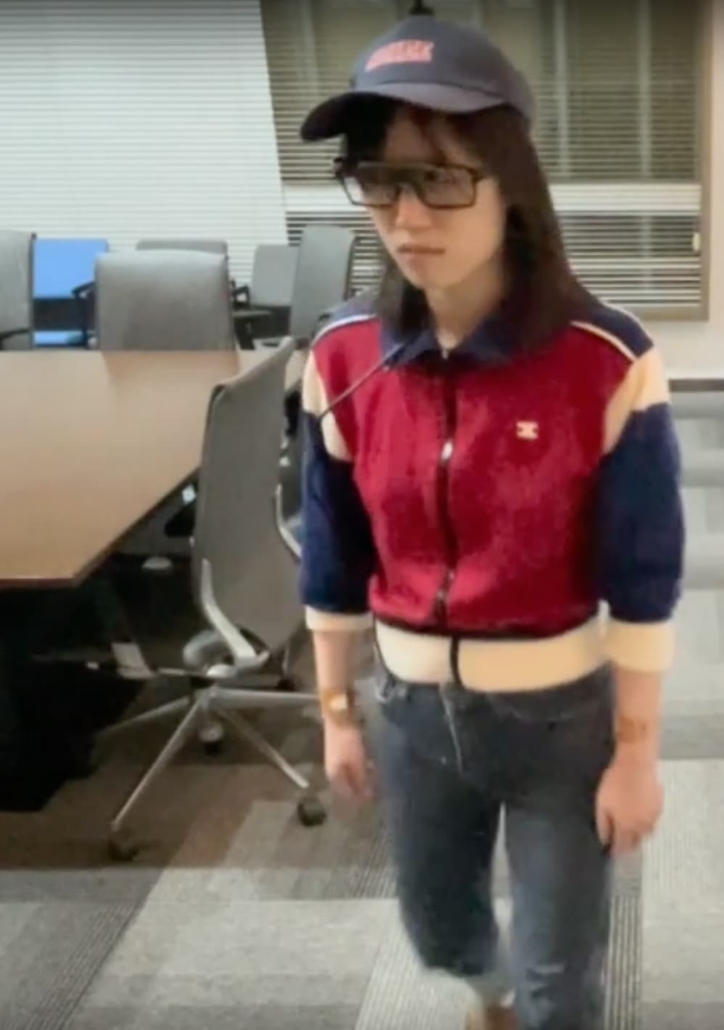

The wearable system combines several key components to assist users in navigating their environment:

- An RGB-D camera mounted in 3D-printed glasses captures visual information and depth data from the environment

- AI algorithms process this information to identify objects and determine obstacle-free routes

- Bone conduction earphones deliver audio cues via spatialized sound to guide direction

- Stretchable artificial skins (A-skins) worn on the wrists provide haptic feedback about lateral obstacles

- A virtual reality training platform helps users learn how to interpret the system’s feedback

This multimodal approach allows the system to provide comprehensive information to users while maintaining a lightweight, wearable form factor.

“By blending dense and sparse data processing, fast and slow response rates, and front-side observation capabilities, the system efficiently monitors a broad area with minimal power usage and low latency,” explain the authors.

Human-centred design innovations

The researchers made several key innovations to ensure the system would be usable and effective for VIPs:

The AI algorithms were specifically customized for visual assistance scenarios, focusing on recognizing the 21 most important objects that users would encounter while maintaining high recognition rates from various angles and distances.

The team developed a dual-method approach for obstacle detection, using both a global threshold and ground interval detection to identify various types of obstacles including hanging, ground-level, and sunken obstacles.
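The article does not spell out how the two checks work, so the following is only an illustrative sketch of one way a global threshold and a ground interval test could be combined on a depth image. Every name and parameter here (`detect_obstacles`, `expected_ground_m`, `near_threshold_m`, `ground_tolerance_m`) is hypothetical, and the logic is an assumption for illustration, not the authors' algorithm.

```python
import numpy as np

def detect_obstacles(depth_m, expected_ground_m,
                     near_threshold_m=1.5, ground_tolerance_m=0.15):
    """Label pixels of a depth image as hanging, raised, or sunken obstacles.

    depth_m: (H, W) array of measured depths in metres.
    expected_ground_m: (H,) array giving the depth at which flat ground
        would appear in each image row; np.inf for rows above the horizon.
    All thresholds are hypothetical values chosen for illustration.
    """
    depth = np.asarray(depth_m, dtype=float)
    expected = np.asarray(expected_ground_m, dtype=float)[:, None]
    ground_row = np.isfinite(expected)  # rows where flat ground should be visible

    # Global threshold: above the horizon, anything nearer than the
    # threshold is treated as a hanging obstacle.
    hanging = (~ground_row) & (depth < near_threshold_m)

    # Ground interval: in ground rows, a depth inside
    # [expected - tol, expected + tol] counts as walkable ground.
    # Nearer than the interval suggests something standing on the ground;
    # farther suggests a drop such as a step or a hole.
    raised = ground_row & (depth < expected - ground_tolerance_m)
    sunken = ground_row & (depth > expected + ground_tolerance_m)

    return {"hanging": hanging, "raised": raised, "sunken": sunken}


# Tiny synthetic example: 4 rows x 3 columns.
depth = np.array([
    [5.0, 1.0, 5.0],   # above horizon: close object in the middle column
    [5.0, 5.0, 5.0],   # above horizon: nothing close
    [3.0, 2.0, 3.0],   # ground row: middle pixel nearer than expected
    [2.0, 2.0, 3.5],   # ground row: right pixel farther than expected
])
expected_ground = np.array([np.inf, np.inf, 3.0, 2.0])
masks = detect_obstacles(depth, expected_ground)
```

In this toy input, the three masks each flag a single pixel: the close object above the horizon, the bump on the ground, and the drop.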

To choose the feedback method, the researchers ran tests with VIPs and found that spatialized audio cues gave the most effective guidance with minimal cognitive load, compared with 3D sounds or verbal instructions.
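As a rough illustration of how a direction can be encoded in stereo sound, the sketch below uses constant-power panning, a common audio technique. The article does not say how the system spatializes its cues, so this is a stand-in rather than the authors' method; `stereo_gains` and `max_bearing_deg` are hypothetical names.

```python
import math

def stereo_gains(bearing_deg, max_bearing_deg=90.0):
    """Return (left_gain, right_gain) for a cue at the given bearing.

    Negative bearings are to the user's left, positive to the right.
    Constant-power panning keeps left**2 + right**2 equal to 1, so the
    cue sounds equally loud wherever it is placed.
    """
    # Clamp the bearing to the supported range.
    clamped = max(-max_bearing_deg, min(max_bearing_deg, bearing_deg))
    # Map [-max, +max] onto a pan angle in [0, pi/2].
    pan = (clamped / max_bearing_deg + 1.0) * math.pi / 4.0
    return math.cos(pan), math.sin(pan)


straight_ahead = stereo_gains(0.0)    # equal gain in both ears
hard_left = stereo_gains(-90.0)       # all of the signal in the left ear
slightly_right = stereo_gains(30.0)   # right ear louder than left
```

A target straight ahead plays equally in both ears, and the balance shifts towards one ear as the target route turns in that direction.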

Real-world testing demonstrates effectiveness

The system was tested with 20 visually impaired participants in various scenarios, including maze navigation, indoor environments with obstacles, and outdoor settings.

After training, participants showed significant improvements in navigation metrics:

- Walking speed increased by 0.1 m/s (28%)

- Navigation time decreased by 24%

- Walking distance decreased by 25%

- Collisions decreased by 67% when using the A-skins

In post-experiment surveys, participants gave the system an average usability score of 79.6 out of 100, placing it in the 85th percentile among commercial and research devices.

Future potential

The researchers believe their system represents a promising step toward practical visual assistance technology for VIPs. Operating at 4 Hz with a power consumption of 6.4 W, the system balances efficiency with effectiveness.
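To put the reported figures in more concrete terms, the short calculation below converts the 4 Hz update rate and 6.4 W power draw into a time and energy budget per update. The battery capacity is a hypothetical value chosen only to illustrate the arithmetic; the article does not state what battery the system uses.

```python
UPDATE_RATE_HZ = 4.0   # reported update rate
POWER_W = 6.4          # reported power consumption

# Time available to process each update, in milliseconds.
period_ms = 1000.0 / UPDATE_RATE_HZ

# Energy spent per update, in joules (watts are joules per second).
energy_per_update_j = POWER_W / UPDATE_RATE_HZ

def runtime_hours(battery_wh, power_w=POWER_W):
    """Estimate runtime from battery capacity, ignoring conversion losses."""
    return battery_wh / power_w

# Hypothetical 20 Wh battery pack, for illustration only.
example_runtime_h = runtime_hours(20.0)

print(f"{period_ms:.0f} ms per update, {energy_per_update_j:.1f} J per update")
print(f"about {example_runtime_h:.1f} h on a hypothetical 20 Wh battery")
```

At these figures the system has 250 ms to capture, process, and deliver feedback for each update, and uses about 1.6 J in doing so.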

“Envisioned as an open-source platform, it welcomes interdisciplinary collaborations to drive its progression, including enhancements in vision models, integrated wearable electronics, insights from neuroscience and personalized generative training environments,” the authors note.

They emphasize that input from a diverse group of VIPs will be crucial for further refinement of navigation aids tailored to specific needs.

The research could improve quality of life for visually impaired people. As an alternative to medical treatments and implanted prostheses, the system's emphasis on usability and effective training may help it achieve broader adoption.

Reference

Tang, J., Zhu, Y., Jiang, G., et al. (2025). Human-centred design and fabrication of a wearable multimodal visual assistance system. Nature Machine Intelligence, 7(4), 627-638. https://doi.org/10.1038/s42256-025-01018-6