AI achieves superhuman accuracy in blood cell classification using generative diffusion models

Researchers have developed CytoDiffusion, a diffusion-based generative classifier that surpasses clinical experts in blood cell image analysis. The system demonstrates remarkable capabilities in anomaly detection, uncertainty quantification and domain adaptation whilst generating synthetic images that expert haematologists could not reliably distinguish from real samples.

Published in Nature Machine Intelligence, the work establishes a comprehensive evaluation framework addressing key challenges in clinical AI deployment, including robustness, interpretability and data efficiency.

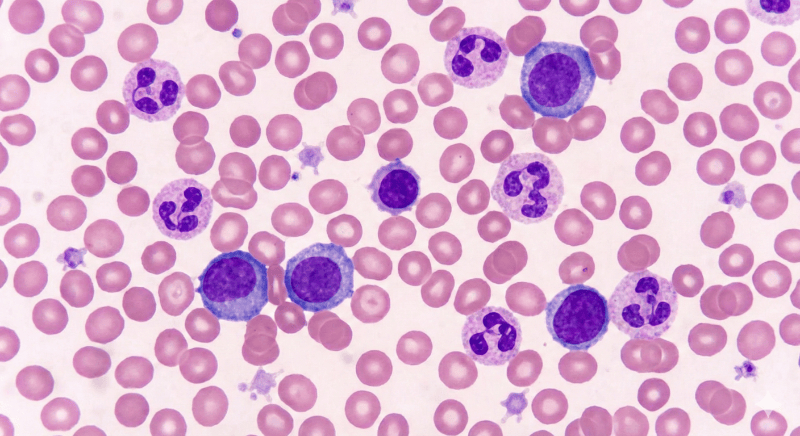

Blood cells. Image for illustrative purposes only.

Revolutionary approach models complete blood cell morphology distribution

Blood cell morphology assessment through light microscopy remains fundamental to haematological diagnostics, yet the task’s inherent complexity – involving subtle morphological variations, biological heterogeneity and technical imaging factors – has long challenged automation efforts. Conventional machine learning approaches using discriminative models struggle with domain shifts, intraclass variability and rare morphological variants, constraining their clinical utility.

The research team, led by scientists at the University of Cambridge and University College London, introduced CytoDiffusion as a fundamentally different approach. Rather than merely learning classification boundaries, the system models the complete distribution of blood cell morphology. “CytoDiffusion is compelled to learn the complete morphological characteristics of each cell type (by modelling the distribution) rather than focusing only on discriminative features near a decision boundary,” the authors explain.
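How can a diffusion model act as a classifier? A common recipe in the diffusion-classifier literature adds noise to an image, asks each class-conditioned denoiser to predict that noise, and picks the class with the lowest average error. The article does not spell out CytoDiffusion's exact procedure, so the toy sketch below illustrates the recipe only. To stay self-contained, it replaces the trained neural network with the exact noise predictor of a hypothetical Gaussian model of each class (mean vector `mu`, per-pixel variance `s2`). `predict_noise`, `diffusion_classify`, the noise-level range and the sample count are all illustrative assumptions.

```python
import math
import random


def predict_noise(x_t, mu, s2, abar):
    """Exact E[eps | x_t, class] when x0 ~ N(mu, s2 * I) for that class.

    Hypothetical stand-in for a trained class-conditional denoiser.
    """
    var_t = abar * s2 + (1.0 - abar)          # variance of x_t under this class
    scale = math.sqrt(1.0 - abar) / var_t     # Cov(eps, x_t) / Var(x_t)
    return [scale * (xi - math.sqrt(abar) * mi) for xi, mi in zip(x_t, mu)]


def diffusion_classify(x0, class_means, s2=0.1, n_samples=200, seed=0):
    """Return (predicted class index, mean denoising error per class)."""
    rng = random.Random(seed)
    errors = [0.0] * len(class_means)
    for _ in range(n_samples):
        abar = rng.uniform(0.05, 0.95)        # random noise level (alpha-bar)
        eps = [rng.gauss(0.0, 1.0) for _ in x0]
        # Forward diffusion: x_t = sqrt(abar) * x0 + sqrt(1 - abar) * eps
        x_t = [math.sqrt(abar) * xi + math.sqrt(1.0 - abar) * ei
               for xi, ei in zip(x0, eps)]
        # Score every class on the same noisy sample to reduce variance
        for c, mu in enumerate(class_means):
            pred = predict_noise(x_t, mu, s2, abar)
            errors[c] += sum((e - p) ** 2 for e, p in zip(eps, pred))
    errors = [e / n_samples for e in errors]
    best = min(range(len(errors)), key=lambda c: errors[c])
    return best, errors


label, errs = diffusion_classify([2.9, 3.1], [[0.0, 0.0], [3.0, 3.0]])
print(label)  # prints 1: the input lies near the second class mean
```

Scoring every class against the same noise draws keeps the comparison fair and reduces variance. A real system would substitute the trained denoiser for `predict_noise` and typically use many more samples.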

The realism of the model’s generated images was tested in an authenticity study involving ten expert haematologists with up to 34 years of experience. Each specialist evaluated 288 images, attempting to distinguish real samples from synthetic ones. The experts achieved an overall accuracy of just 0.523 (95% confidence interval: 0.505-0.542), only marginally above the 0.5 expected from guessing, indicating that they could not reliably tell CytoDiffusion’s synthetic images apart from genuine blood cell photographs.
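The reported interval can be roughly reproduced with a Wilson score interval for a binomial proportion. This assumes, although the article does not state it, that all 2,880 judgements (10 experts × 288 images) are pooled and treated as independent. The authors' exact method may differ, so the result is close to, rather than identical with, the published figures.

```python
import math


def wilson_interval(p_hat, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    denom = 1.0 + z * z / n
    centre = (p_hat + z * z / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


n_trials = 10 * 288          # assumption: every judgement pooled as an independent trial
lo, hi = wilson_interval(0.523, n_trials)
print(f"{lo:.3f}-{hi:.3f}")  # prints 0.505-0.541
```

Under these assumptions the lower bound sits just above 0.5. The experts' performance is therefore better described as marginally above chance than as exactly at chance.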

Outperforming human experts in uncertainty quantification

CytoDiffusion’s metacognitive abilities – its capacity to judge the reliability of its own predictions – represent a significant advance. The researchers used psychometric function estimation to evaluate the model’s uncertainty measures and found that its confidence scores behave like those of an ideal psychophysical observer detecting signals in noise. This suggests the model’s uncertainty is dominated by aleatoric components (inherent variability in the data) rather than by epistemic uncertainty arising from the model’s own limited knowledge.
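A psychometric function describes how the probability of a correct response rises with stimulus strength. For an ideal observer detecting a signal in Gaussian noise, it takes a cumulative-Gaussian shape. The sketch below defines that function and fits its threshold `alpha` and slope `beta` by grid-search maximum likelihood. It is a minimal illustration, not the authors' analysis. The guess rate of 0.5 (a two-alternative task), the zero lapse rate, the grid ranges and the x-axis variable are assumptions. The self-check data are generated from the function itself, not taken from the study.

```python
import math


def psychometric(x, alpha, beta, gamma=0.5, lam=0.0):
    """Cumulative-Gaussian psychometric function.

    alpha: threshold, beta: spread, gamma: guess rate, lam: lapse rate.
    """
    phi = 0.5 * (1.0 + math.erf((x - alpha) / (beta * math.sqrt(2.0))))
    return gamma + (1.0 - gamma - lam) * phi


def fit_psychometric(xs, n_correct, n_total, gamma=0.5, lam=0.0):
    """Grid-search maximum-likelihood estimate of (alpha, beta)."""
    best, best_ll = None, -math.inf
    for i in range(-40, 41):
        alpha = i * 0.05                      # thresholds from -2.0 to 2.0
        for j in range(1, 41):
            beta = j * 0.1                    # spreads from 0.1 to 4.0
            ll = 0.0
            for x, k, n in zip(xs, n_correct, n_total):
                p = psychometric(x, alpha, beta, gamma, lam)
                p = min(max(p, 1e-12), 1 - 1e-12)   # guard against log(0)
                ll += k * math.log(p) + (n - k) * math.log(1 - p)
            if ll > best_ll:
                best, best_ll = (alpha, beta), ll
    return best


# Self-check: expected counts generated from known parameters are recovered
xs = [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
n_total = [100] * len(xs)
n_correct = [n * psychometric(x, 0.5, 1.0) for x, n in zip(xs, n_total)]
alpha_hat, beta_hat = fit_psychometric(xs, n_correct, n_total)
print(alpha_hat, beta_hat)
```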

When individual experts’ answers were scored against the expert consensus, CytoDiffusion’s confidence scores described the resulting pattern better than the experts’ own confidence ratings did. The analysis demonstrated that “CytoDiffusion’s metacognitive abilities are superior to human experts here,” with the model’s uncertainty estimates distinguishing more effectively between experts of differing ability.

The practical implications are substantial. As the authors note, “Cases with high certainty can be processed automatically, whereas uncertain cases can be flagged for human review.” Transparent quantification of model uncertainty could help build trust amongst clinical practitioners, whilst also providing a way to detect domain shifts or equipment malfunctions.
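The triage rule quoted above can be sketched as a confidence threshold. As is common for diffusion classifiers, class probabilities here are assumed to come from a softmax over negative per-class denoising errors. Whether CytoDiffusion uses exactly this mapping is not confirmed, and the 0.9 threshold is a hypothetical value chosen for illustration.

```python
import math


def class_probabilities(errors, temperature=1.0):
    """Softmax over negative denoising errors (lower error -> higher probability)."""
    logits = [-e / temperature for e in errors]
    m = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def triage(errors, threshold=0.9):
    """Return ('auto', class) for confident cases, ('review', class) otherwise."""
    probs = class_probabilities(errors)
    best = max(range(len(probs)), key=lambda c: probs[c])
    route = "auto" if probs[best] >= threshold else "review"
    return route, best


print(triage([0.1, 5.0, 6.0]))   # ('auto', 0): top class has ~0.99 probability
print(triage([0.2, 1.5, 1.6]))   # ('review', 0): top class has only ~0.66
```

In practice the threshold would be tuned on held-out data to balance the fraction of cases processed automatically against the error rate among them.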

Superior anomaly detection and domain robustness

CytoDiffusion excelled at detecting anomalous cell types that had been excluded during training. On the Bodzas dataset, with blasts as the abnormal class, the system achieved a sensitivity of 0.905 and a specificity of 0.962, with an area under the curve of 0.990. By contrast, the Vision Transformer model had a sensitivity of only 0.281, meaning it missed roughly seven in ten blasts. This renders it inadequate for clinical applications, where high sensitivity is essential to minimise false negatives.
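Sensitivity, specificity and AUC for anomaly detection can be computed from per-cell anomaly scores as below. The scores are toy values, and the convention that a higher score means “more anomalous” is an assumption. This article does not describe how CytoDiffusion derives its anomaly score. The AUC is computed as the probability that a randomly chosen anomalous cell scores higher than a randomly chosen normal one, which equals the area under the ROC curve.

```python
def sensitivity_specificity(abnormal_scores, normal_scores, threshold):
    """Flag a cell as anomalous when its score is at or above the threshold."""
    tp = sum(s >= threshold for s in abnormal_scores)   # anomalies caught
    fn = len(abnormal_scores) - tp                       # anomalies missed
    tn = sum(s < threshold for s in normal_scores)       # normal cells passed
    fp = len(normal_scores) - tn                         # false alarms
    return tp / (tp + fn), tn / (tn + fp)


def auc(abnormal_scores, normal_scores):
    """ROC AUC as P(anomaly outscores a normal cell); ties count as 1/2."""
    wins = 0.0
    for a in abnormal_scores:
        for n in normal_scores:
            wins += 1.0 if a > n else 0.5 if a == n else 0.0
    return wins / (len(abnormal_scores) * len(normal_scores))


blasts = [0.9, 0.8, 0.4]        # toy anomaly scores, not study data
normals = [0.1, 0.3, 0.5]
print(sensitivity_specificity(blasts, normals, threshold=0.45))  # both 2/3
print(auc(blasts, normals))     # 8/9
```

Unlike sensitivity and specificity, the AUC does not depend on any single threshold, which is why the two kinds of metric are usually reported together.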

The model demonstrated remarkable robustness to domain shifts – variations in microscope types, camera systems and staining techniques common across different laboratories. When trained on Raabin-WBC and tested on datasets created with different equipment (Test-B) and with entirely different microscopes, cameras and staining methods (LISC), CytoDiffusion achieved state-of-the-art accuracies of 0.985 on Test-B and 0.854 on LISC, consistently outperforming discriminative models.

Data efficiency and clinical explainability

In low-data scenarios – particularly relevant for rare cell types – CytoDiffusion consistently outperformed discriminative models. With just 10 images per class, the advantage was pronounced, demonstrating the model’s learning efficiency. This capability becomes crucial when dividing classes into more granular subclasses encountered in clinical haematological assessments, many of which may only be sparsely represented.
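Low-data experiments of this kind are typically built by subsampling a fixed number of training images per class. The sketch below draws `k` images per class with a fixed seed. The article does not describe how the 10 images per class were chosen, so uniform random selection, the function name `k_shot_subset` and the example class counts are all assumptions.

```python
import random
from collections import defaultdict


def k_shot_subset(labels, k, seed=0):
    """Return sorted indices of k random examples per class (all of a smaller class)."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)            # fixed seed makes the split reproducible
    chosen = []
    for label in sorted(by_class):
        idxs = by_class[label]
        chosen.extend(rng.sample(idxs, min(k, len(idxs))))
    return sorted(chosen)


# Hypothetical label list: a rare class ("blast") has fewer than k examples
labels = ["neutrophil"] * 50 + ["monocyte"] * 30 + ["blast"] * 4
subset = k_shot_subset(labels, k=10)
print(len(subset))  # 24: 10 neutrophils, 10 monocytes, all 4 blasts
```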

The system provides interpretable explanations through counterfactual heat maps generated directly from the generative process. These visualisations highlight the regions of a cell image that would need to change for the cell to be assigned a different class, offering immediate insight into the morphological distinctions between cell types. For example, when examining the transition from monocyte to immature granulocyte, the model indicated differences in cytoplasm characteristics and suggested filling of monocytic vacuoles – capturing typical morphological findings that differentiate these cell types.
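Once a counterfactual image has been generated for the target class, one simple way to turn it into a heat map is a per-pixel absolute difference, normalised to [0, 1]. Generating the counterfactual is outside this sketch (it is passed in as `counterfactual`). The difference-based construction is an illustrative assumption, and the paper's heat maps may be computed differently.

```python
def counterfactual_heatmap(image, counterfactual):
    """Per-pixel change map: absolute difference summed over channels, scaled to [0, 1].

    image, counterfactual: H x W x C nested lists of the same shape.
    """
    diff = [[sum(abs(a - b) for a, b in zip(px, cf_px))
             for px, cf_px in zip(row, cf_row)]
            for row, cf_row in zip(image, counterfactual)]
    peak = max(max(row) for row in diff)
    if peak == 0:
        return diff                      # identical images: nothing changed
    return [[v / peak for v in row] for row in diff]


# Toy 2 x 2 RGB example: two pixels change in the hypothetical counterfactual
image = [[[0.2, 0.2, 0.2], [0.5, 0.5, 0.5]],
         [[0.1, 0.1, 0.1], [0.9, 0.9, 0.9]]]
cf = [[[0.2, 0.2, 0.5], [0.5, 0.5, 0.5]],
      [[0.1, 0.1, 0.1], [0.3, 0.3, 0.3]]]
heat = counterfactual_heatmap(image, cf)
print(heat)  # bottom-right pixel is 1.0, top-left about 0.17, the rest 0
```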

Comprehensive evaluation framework for medical AI

Beyond introducing CytoDiffusion, the research establishes a multidimensional evaluation framework encompassing robustness to domain shift, anomaly detection capability, performance in low-data regimes, uncertainty quantification reliability and interpretability. The authors propose that “the research community adopt these evaluation tasks and metrics when assessing new models for blood cell image classification.”

The work was supported by the Trinity Challenge, Wellcome Trust, British Heart Foundation and National Institute for Health and Care Research. The dataset CytoData, comprising 559,808 single-cell images with labeller confidence scores, is publicly available and addresses critical gaps in existing datasets by explicitly including artefacts – a key challenge in clinical applications.

Whilst CytoDiffusion is computationally expensive during inference (averaging 1.8 seconds per image), the researchers note that its efficiency could be improved through code optimisation, model distillation and parallelisation, as well as through advances in hardware.
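To put the 1.8-second figure in context, the sketch below estimates wall-clock time for a batch of cells. The 100-cell batch mirrors a traditional manual differential count. The assumption of perfect linear scaling across `workers` is optimistic, and the article does not state the hardware behind the timing.

```python
import math


def estimated_wall_time(n_cells, seconds_per_image=1.8, workers=1):
    """Wall-clock seconds to classify n_cells, assuming perfect parallel scaling."""
    rounds = math.ceil(n_cells / workers)   # images each worker processes in turn
    return rounds * seconds_per_image


print(estimated_wall_time(100))             # about 180 s for a 100-cell differential
print(estimated_wall_time(100, workers=4))  # about 45 s
```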

CytoData online

CytoData is available at: https://www.ebi.ac.uk/biostudies/studies/SBSST2156

Code availability

All code is available via GitHub at:

https://github.com/CambridgeCIA/CytoDiffusion

Reference

Deltadahl, S., Gilbey, J., Van Laer, C., et al. (2025). Deep generative classification of blood cell morphology. Nature Machine Intelligence, 7, 1791–1803. https://doi.org/10.1038/s42256-025-01122-7