Brain-to-brain synchronicity reveals shared linguistic space during conversations

A groundbreaking study from Princeton University has uncovered new insights into how our brains synchronise during verbal communication, demonstrating that specific words evoke mirrored patterns of brain activity in both speakers and listeners.

Neuroscientists have long been fascinated by the phenomenon of “brain-to-brain coupling” during human interactions, but until now, it was unclear to what extent this synchronisation was driven by linguistic content versus other factors like body language or tone of voice. New research published in the journal Neuron [1] has shed light on this question, revealing that brain-to-brain coupling during conversations can be modelled by considering the words used and their context.

The study’s findings

The research team, led by neuroscientist Zaid Zada, utilised a novel approach to examine the role of context in driving brain coupling. They collected brain activity data and conversation transcripts from pairs of epilepsy patients during natural conversations. These patients were undergoing intracranial monitoring using electrocorticography for unrelated clinical purposes at the New York University School of Medicine Comprehensive Epilepsy Center.

Zada explained the significance of their findings: “We can see linguistic content emerge word-by-word in the speaker’s brain before they actually articulate what they’re trying to say, and the same linguistic content rapidly reemerges in the listener’s brain after they hear it.”

The importance of context

One of the key insights from this study is the critical role that context plays in verbal communication. Without context, it would be impossible to discern whether a word like “cold” refers to temperature, a personality trait, or a respiratory infection.

Co-senior author Samuel Nastase emphasised this point: “The contextual meaning of words as they occur in a particular sentence, or in a particular conversation, is really important for the way that we understand each other. We wanted to test the importance of context in aligning brain activity between speaker and listener to try to quantify what is shared between brains during conversation.”

Innovative methodology

To extract the context surrounding each word used in the conversations, the researchers employed the large language model GPT-2. This information was then used to train a model that could predict how brain activity changes as information flows from speaker to listener during conversation.
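The idea of predicting brain activity from word context can be illustrated with a toy encoding model. The sketch below is not the study's code: it assumes a ridge regression as the mapping, uses random vectors as stand-ins for GPT-2 contextual embeddings, and simulates the "neural" data. The names `fit_ridge` and `encoding_score` are hypothetical.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def encoding_score(X_train, Y_train, X_test, Y_test, alpha=1.0):
    """Fit on training words, then return the per-electrode Pearson
    correlation between predicted and held-out activity."""
    W = fit_ridge(X_train, Y_train, alpha)
    pred = X_test @ W
    pred_c = pred - pred.mean(axis=0)
    true_c = Y_test - Y_test.mean(axis=0)
    num = (pred_c * true_c).sum(axis=0)
    den = np.sqrt((pred_c ** 2).sum(axis=0) * (true_c ** 2).sum(axis=0))
    return num / den

# Synthetic stand-ins (assumptions, not real data):
rng = np.random.default_rng(0)
n_words, n_dims, n_electrodes = 600, 20, 5
embeddings = rng.standard_normal((n_words, n_dims))          # one vector per word
true_weights = rng.standard_normal((n_dims, n_electrodes))   # hidden linear mapping
neural = embeddings @ true_weights + 0.5 * rng.standard_normal((n_words, n_electrodes))

# Train on the first 500 words, evaluate on the remaining 100.
scores = encoding_score(embeddings[:500], neural[:500],
                        embeddings[500:], neural[500:])
print(scores.round(2))
```

Scoring on held-out words matters here: a model that merely memorised the training words would show no correlation on words it had not seen.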

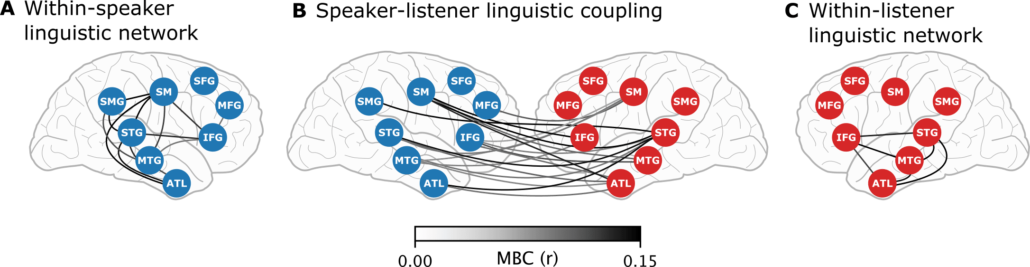

The model revealed that word-specific brain activity peaked in the speaker’s brain approximately 250 milliseconds before they spoke each word. Correspondingly, peaks in brain activity associated with the same words appeared in the listener’s brain about 250 milliseconds after they heard them.
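One way to picture this timing result is a lag analysis: correlate a word-level feature with the recorded signal at a range of offsets from word onset and see where the correlation peaks. The following is a minimal sketch on simulated data, assuming a 10 ms sampling step, a single scalar feature per word, and an idealised impulse-like response. None of these details come from the study, and `lagged_correlation` and `simulate` are hypothetical helpers.

```python
import numpy as np

def lagged_correlation(feature, signal, onsets, lags):
    """Correlate a per-word feature with the signal sampled at each lag
    (in samples) relative to word onset."""
    return np.array([np.corrcoef(feature, signal[onsets + lag])[0, 1]
                     for lag in lags])

rng = np.random.default_rng(0)
sample_ms = 10                               # assumed sampling step
n_words = 400
onsets = 100 + 60 * np.arange(n_words)       # word onsets, 600 ms apart
feature = rng.standard_normal(n_words)       # toy word-specific feature
n_samples = onsets[-1] + 100

def simulate(shift):
    """Noisy signal carrying the word feature `shift` samples from onset."""
    signal = 0.5 * rng.standard_normal(n_samples)
    signal[onsets + shift] += feature
    return signal

speaker = simulate(-25)    # feature appears 250 ms before word onset
listener = simulate(+25)   # feature appears 250 ms after word onset

lags = np.arange(-50, 51, 5)                 # -500 ms to +500 ms
speaker_peak_ms = lags[np.argmax(lagged_correlation(feature, speaker, onsets, lags))] * sample_ms
listener_peak_ms = lags[np.argmax(lagged_correlation(feature, listener, onsets, lags))] * sample_ms
print(speaker_peak_ms, listener_peak_ms)
```

Real recordings would show a broader, smoother peak than this idealised simulation, but the logic of scanning across lags is the same.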

Notably, this context-based approach outperformed previous models in predicting shared patterns of brain activity between speakers and listeners.

The study’s findings underscore the importance of context in verbal communication and demonstrate the potential of using large language models to understand complex linguistic processes in the brain.

Zada commented on the significance of their approach: “Large language models take all these different elements of linguistics like syntax and semantics and represent them in a single high-dimensional vector. We show that this type of unified model is able to outperform other hand-engineered models from linguistics.”

The research team plans to expand on this study by applying their model to other types of brain activity data, such as fMRI, to investigate how different parts of the brain coordinate during conversations.

Nastase outlined the potential for future research: “There’s a lot of exciting future work to be done looking at how different brain areas coordinate with each other at different timescales and with different kinds of content.”

Broader implications for neuroscience and linguistics

This research represents a significant step forward in our understanding of how the brain processes language in real-world contexts. By demonstrating that brain-to-brain coupling can be modelled using contextual linguistic information, the study opens up new avenues for exploring the neural basis of communication.

The findings could have implications for a wide range of fields, including neurolinguistics, cognitive psychology, and even artificial intelligence. As we continue to unravel the complexities of how our brains process and transmit language, we may gain new insights into disorders affecting communication and develop more effective interventions.

By revealing how specific words and their contexts are mirrored in the brains of speakers and listeners, this research from Princeton University deepens our understanding of the neural mechanisms underlying human interaction.

Reference:

[1] Zada, Z., et al. (2024). A shared model-based linguistic space for transmitting our thoughts from brain to brain in natural conversations. Neuron. https://doi.org/10.1016/j.neuron.2024.06.025